What you should know about bedbugs and how to remove them easily

Bedbugs in your home? Learn how they live and how to get rid of them

What Happens When You Eat Papaya Seeds? 5 Amazing Benefits

5 foot symptoms that might suggest blood sugar imbalance

Should You Drink Water Before Bed? The Truth About Your Sleep

What a Scalloped Tongue Can Reveal About Tongue Swelling

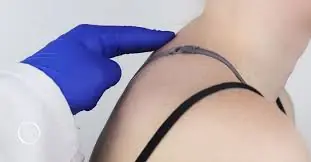

Neck Hump: A Possible Warning Sign of Bigger Health Issues — and How to Fix It

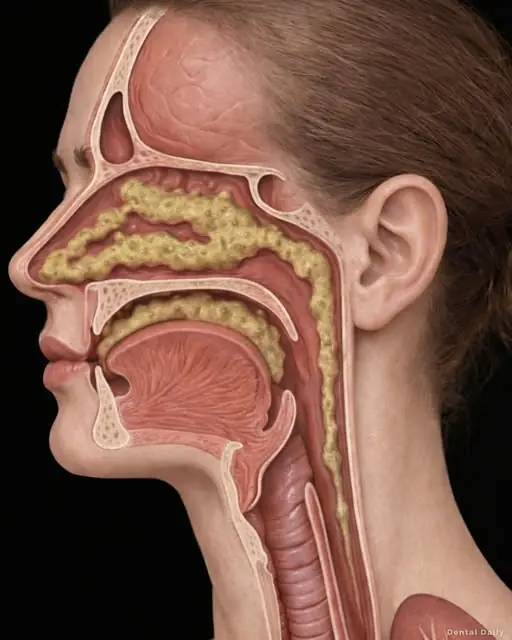

You’re Not Sick—So Why Do You Still Have Phlegm Every Day?

One simple ingredient can help refresh a dirty mop quickly.

Storing cooked rice incorrectly can lead to food safety risks.

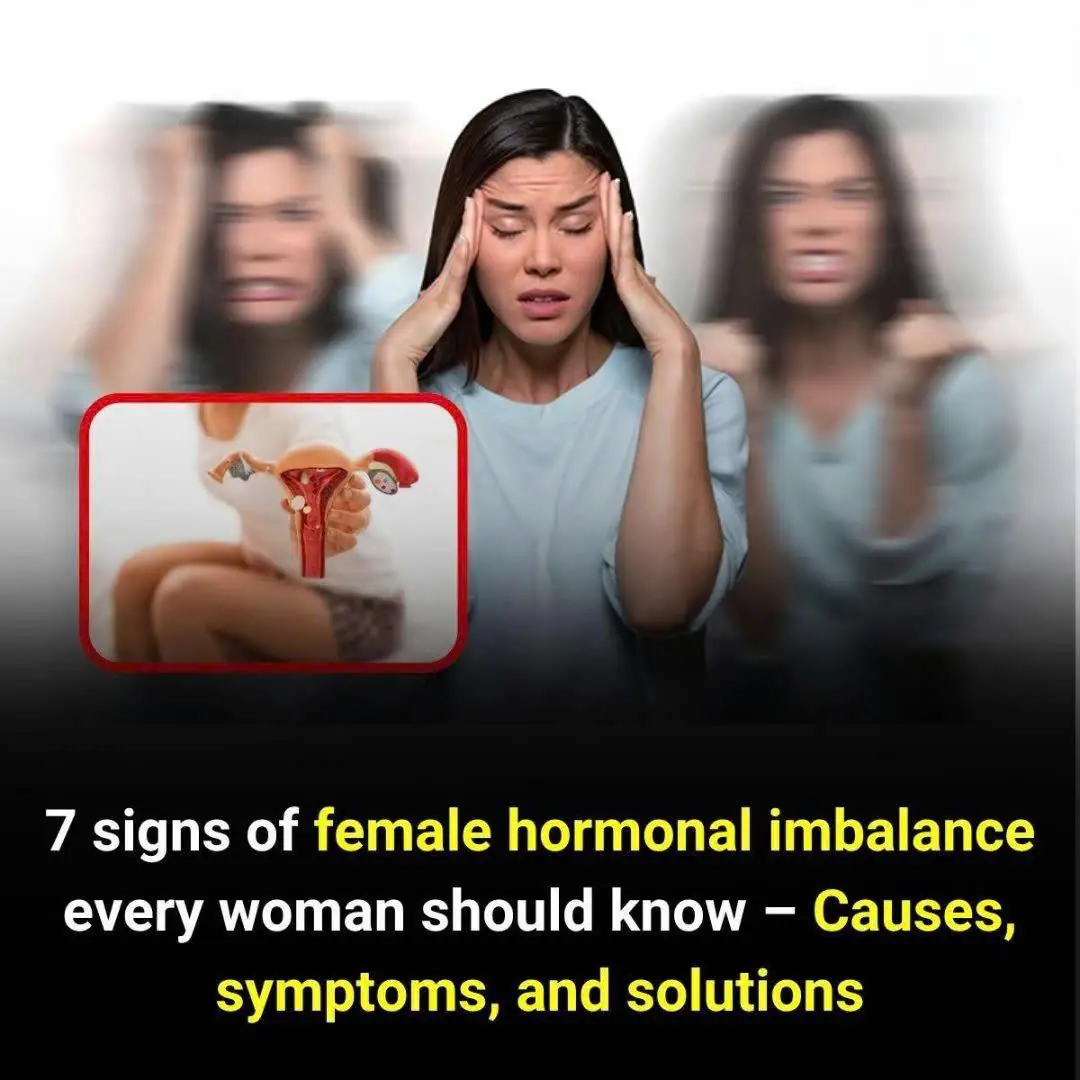

7 Signs of Female Hormonal Imbalance Every Woman Should Know

Wearing socks while sleeping might sound like a small or even strange habit, but it actually has surprising benefits for your body and your overall health — especially when it comes to improving the quality of your sleep.

Do You Sleep on Your Side? Here’s the Powerful Effect One Simple Change Can Have on Your Body

Kidney disease is a serious and unfortunately common medical condition.

A simple steam trick can make oven cleaning much easier.

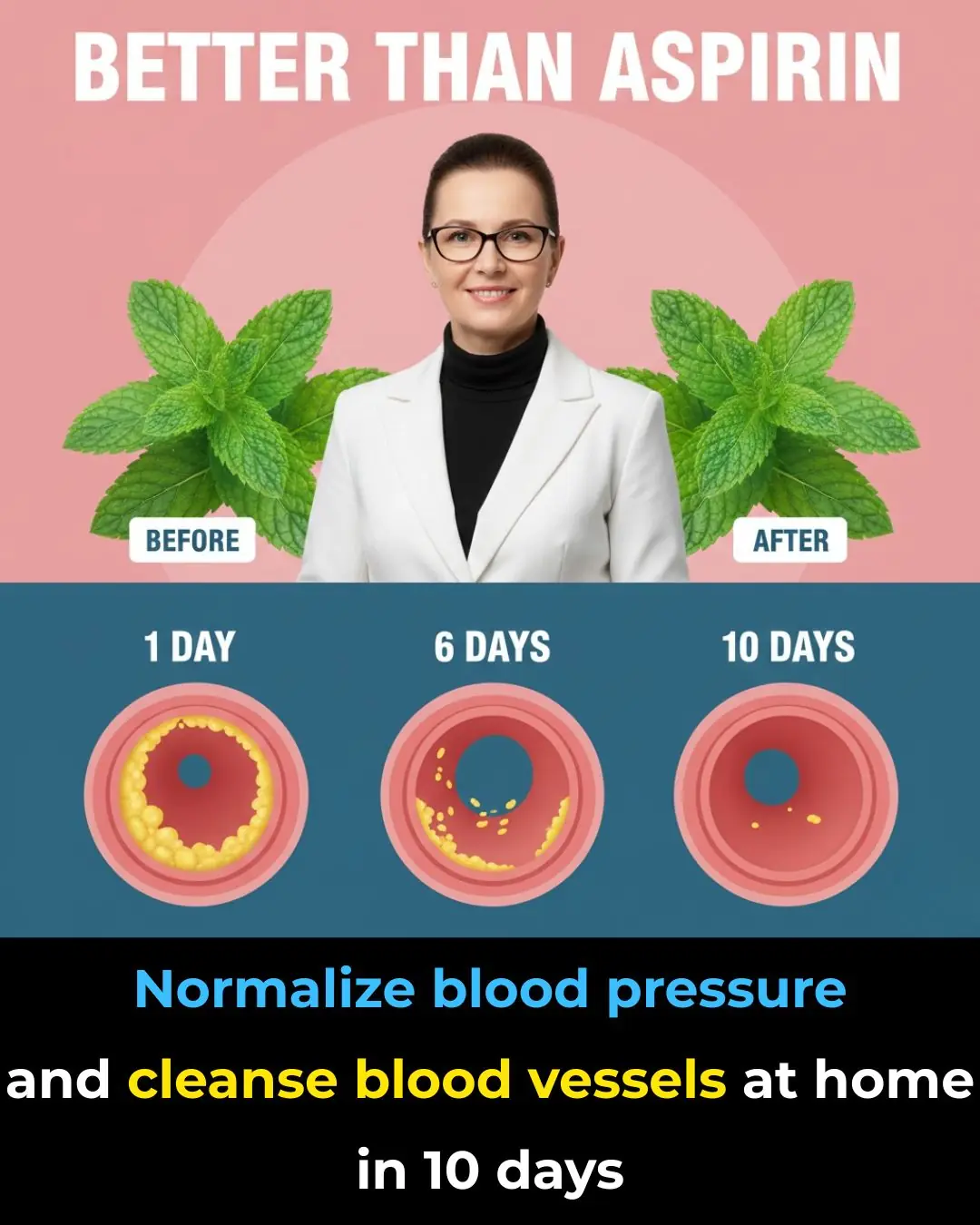

Clogged arteries are more common than most people realize — and they can silently put your heart at risk.

In recent months, a sobering trend has emerged that has left both the public and the medical community reeling: end-stage renal disease (ESRD), once considered a condition of the elderly, is now showing up in people in their 20s—and even teenagers.

Gyan Mudra, an elegant hand gesture with deep roots in yoga, has been treasured for centuries for its ability to calm the mind and sharpen focus.

Avocados are rich in nutrients that support heart and body health.

A Simple Image That Reminds Us How Perspective Shapes the Way We See the World

Attention! This lump may appear due to something you do every day