A modern rendering of the Utah teapot. Doug Hatfield/Wikimedia Commons

In 2005, Ikea conducted an experiment on its customers in the pages of its annual catalog. Near the end of the dining room and chairs section, on page 204, the company placed a rendering of the Alfons, a boxy and inexpensive pine chair, among photographs of other seating options. The marketing department wanted to see if anyone could tell the difference between pictures of real furniture, which is costly and time-consuming to transport, assemble, and stage, and a 3D model, built in Autodesk’s 3ds Max design software, that could be sent anywhere with a few mouse clicks and keystrokes. No one noticed, and every year thereafter, Ikea replaced more photos with computer models. By 2014, over 75% of the single-product shots in its catalog were digital renderings. In 2020, Ikea announced its 2021 catalog would be its last. In the future, customers will be directed to an augmented reality app, Ikea Place, that lets them stage digital furniture models in their own homes with their phone’s camera.

In Image Objects: An Archaeology of Computer Graphics, Jacob Gaboury, an associate professor of film and media at UC Berkeley, tries to explain how daily life came to be flooded with digital objects like Ikea’s virtual furniture, which he argues exist as both things and representations. “The phone in your pocket is a CAD file, as is the toothbrush sitting on your bathroom counter,” Gaboury writes. “The same file can be output as a visual image or physical object; it is at once both together. The shoes on your feet, the car you drove to work, the computer on which I write this, and the book you now read, each are indelibly tied to the history of computer graphics.”

Gaboury’s book is structured around a paradox often remarked upon by media theorists: that computers are not a visual medium, and that the polygonal simulations we see as graphics are, in fact, material structures that “shape the conditions of possibility for the ways we have come to design our world.” To explain how the physical and polygonal became so enmeshed, Gaboury focuses on five significant but mostly forgotten technological developments from computer history (“an algorithm, an interface, an object standard, a programming paradigm, and a hardware platform”) that together make today’s world possible.

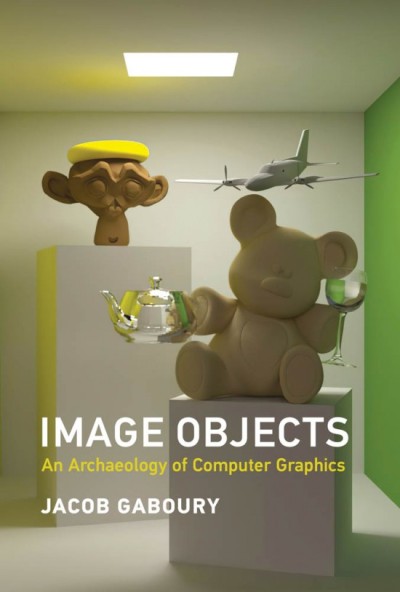

Image Objects: An Archaeology of Computer Graphics by Jacob Gaboury, MIT Press, 2021; 312 pages, 133 black-and-white photos and 20 color plates; $35 hardcover. Courtesy MIT Press

It’s a promising topic, and Gaboury guides readers through it with technical expertise. But he often seems confined by his own academic framing. Using the newish methodology of media archaeology—a scholarly practice emphasizing the analysis of “dead” media formats and how they were perceived in their own eras—Gaboury tells a weightless story about computers and the people who helped introduce them into popular culture. I finished the book with the impression that Martin Heidegger, Friedrich Kittler, and Lev Manovich were the patron saints of the computer age, not the US Department of Defense and its network of private subcontractors. Gaboury’s preference for abstruse ontological questions about the dual status of the image object occludes the historical interests and aspirations of his human subjects, making it seem like a generation of upper middle-class government contractors were mainly interested in Being and Time instead of school work, careerism, and a vague faith in the progressive benevolence of American hegemony.

To recap the history Gaboury tells: In the early 1960s a series of newly developed algorithms helped computer scientists create three-dimensional objects by drawing lines between multiple points on a computer grid, with instructions for which lines should be visible and which should remain part of the object’s unseen interior. Later, researchers created various versions of the frame buffer, a pool of instantly accessible memory where coordinates and line drawing instructions can be stored as encapsulated objects, saving the computer from having to recalculate everything from the start every time the object appears on screen.

Eager to render something other than abstract geometric shapes, different groups, most of them graduate students doing research at universities, began measuring real-world objects—hands, faces, teapots—and converting them into 3D wireframes. New algorithms were developed that created rules for how these polygonal wireframes could interact with other objects, and eventually secondary processor units were built to run alongside the computer’s main processor to handle image output. This made it possible to build elaborate graphical user interfaces, so ordinary people could manipulate computer models without having to plunge into the mathematical morass of coordinates and calculations. Soon the general public was swapping faces in Photoshop; printing zines in PostScript or Quark; downloading shareware levels of Doom and Duke Nukem; and, eventually, moving virtual Ikea couches around their apartments.

Gaboury’s account of this history feels more like a technical manual than a historical survey of a specific culture and community. Despite his superlative claims about how computers have reshaped the world, he doesn’t offer much insight into what’s so different about a world where people shop for mass-produced consumer objects using renders instead of photos. Has some unspoken revolution taken place because we no longer have to imagine what a blue love seat would look like in the corner? Have we experienced a collective loss because toothbrushes are no longer handcrafted?

Image Objects doesn’t have substantive answers to these questions, and Gaboury’s history makes it clear that many of his subjects didn’t fully know what they were doing or why. In place of a master plan, the engineers and computer scientists he discusses seem to have been working on narrowly tailored technical questions without giving much thought to what they were hoping to accomplish. When someone does have a larger goal, it often sounds bizarre, even delusional. Ivan Sutherland, who worked at the Defense Department’s Information Processing Techniques Office before founding an influential computer research program at the University of Utah, thought that computers could one day “control the existence of matter.” Sutherland’s ideal computer interface would be not a screen but a room, in which the computer controlled everything, from molecules to mood lighting. “A chair displayed in such a room would be good enough to sit in,” Sutherland wrote in a 1965 essay. “Handcuffs displayed in such a room would be confining, and a bullet displayed would be fatal.”

Don’t we already have handcuffs that confine, bullets that kill, and chairs good enough to sit in? How many more chairs, real or otherwise, can one civilization need? The more explicit the end goals, the more things start to sound like a hostage situation rather than a progress narrative. Gaboury hints at the idea when he argues that Ikea’s AR app “controls for realism by controlling the limits of what can be simulated.” Instead of saying more, he leaves things there. But it follows that, even in the most benign time-wasters, there is the seed of authoritarian coercion knocking at the door, demanding to steer one’s dreams and desires through the prefab gridding of global consumerism. Computer graphics trick us into not just desiring our own alienation, but working to ensure it is the one reliable outcome of our interoperable, object-oriented lives.

Exterior of the Computer History Museum, Mountain View, Calif. Courtesy Computer History Museum. Photo Doug Fairbairn

Toward the end of Image Objects, Gaboury delivers a potted history of Silicon Graphics Inc., which for a time was one of the most accomplished and influential computer graphics companies in the world. Cofounded by James Clark, a former researcher in Sutherland’s University of Utah program, SGI created groundbreaking computer graphics for Terminator 2: Judgment Day (1991), Twister (1996), and Jurassic Park (1993), as well as the chipset for the Nintendo 64, one of the first video game consoles capable of rendering 3D graphics.

SGI’s sudden ascent took place in the early 1990s. It was an era in which super agents like Michael Ovitz and Ron Meyer shifted the balance of power in the film and television industry away from studios and institutional financiers toward stars like Tom Cruise, Arnold Schwarzenegger, Julia Roberts, and Jim Carrey, who were extracting record concessions in both upfront pay and profit participation. Computer graphics offered studios the opportunity to push back against star power, creating scab celebrities out of cyclones, velociraptors, and molten pools of metal that couldn’t demand royalties or a cut of merchandising. And studios rewarded SGI for its role in helping them on their way, turning it into a $4-billion-a-year business.

Soon, advancements in microprocessors made SGI’s costly and cumbersome workstations seem unnecessary, while a distributed global supply chain of digital effects houses in less expensive territories had spun up to make even more complex and labor-intensive effects possible. SGI found itself unable to compete and was eventually forced into bankruptcy in 2006. Today, its former offices, famously designed to resemble a grid of pixels on a computer screen, have been converted into the Computer History Museum, the last stop on Gaboury’s “archaeological” tour.

On his visit, Gaboury is struck by how closely the office resembles what was shown in a 1996 promotional video about the company. The visit leads him to see a similar quality in graphics computation itself, the ways the machinery can invisibly preserve specific ways of doing things, even though the users and settings may come and go. “Unlike those media that defined the episteme of the twentieth century, in which history is preserved through the acts of recording the passage of time,” he writes, “graphical hardware does not preserve history; it hardens into a singular logic that executes the act of representation. Yet this historical thing it crystallizes is not change or transformation but rather the logic of procedural flows, of repetition and acceleration, a procedure crystallized.”

Though Gaboury doesn’t follow the thought through to say what exactly that crystallized procedure is being used to accomplish in social and political terms, SGI’s arc, from small but radically ambitious startup to outdated dinosaur, suggests an explanation. Computers involve us in the construction of a future in which we are obsolete, a world filled with redundancies, denatured shell versions of things that were already there. Just as the Ikea Place app was designed not to sell furniture, but to condition consumers to see what they already have as suboptimal relative to what they could have with a few taps and swipes, computer graphics show us a world where everything that already exists is somehow lacking, less than it could be. In the short term, the affective impulses they produce can feel like agency, even purpose. But eventually that purpose becomes reflexive. We begin to see ourselves as suboptimal redundancies and, just like last year’s dining room chair, as unnecessary and unwanted.

Source link : https://www.artnews.com/art-in-america/aia-reviews/image-objects-jacob-gaboury-review-1234605012